ChatGPT shit, get GetGPT banged

It's all about the EEC Punk Rock Mountain baybee

Today’s post is a long read about ChatGPT - probing where it gets its ‘knowledge’ from and figuring out how it creates its world view - through the unlikely medium of mid-1980s indie pop.

LLMs are the wonder sensation of the day – lots of people in government wrestling with them.

We don’t know what’s inside the box, we can only judge the output. The data sets and training are opaque. The Winograd schemas of 1971 use grammatical ambiguity to probe AI internals.

They consist of pairs of statements and questions:

The large ball crashed right through the table because it was made of Styrofoam. What was made of Styrofoam?

The large ball crashed right through the table because it was made of steel. What was made of steel?

ChatGPT 3.5 answered ‘the table’ to both. English grammar relies on word order - ‘it’ can refer back to either the table or the ball. Statistically it is the table. A 7 year old who knows the heft and consequence of steel and Styrofoam, of balls and tables, can reason about this; an LLM can’t.

There are other sets of Winograd schemas where ChatGPT switches the pronoun between object and subject.

The professor married the student because she was pregnant. Who was pregnant?

The student married the professor because she was pregnant. Who was pregnant?

Patriarchy is baked into the corpus – the student, the subordinate, is always pregnant.

ChatGPT 4.0 provided the ‘correct’ answers to some things it had ‘failed’ at in 3.5. So had it learned to reason?

Chomsky famously proved grammar acquisition was integral and not statistical by saying the famous sentence colourless green ideas sleep furiously. His readers recognised this as a well formed sentence despite each pair of words never having been uttered next to each other before (colourless green, green ideas, etc). But after he said it, these pairs became famous, quoted and commonplace.

Has ChatGPT 4.0 learned to reason? Or are those questions merely no longer unasked? Has it acquired the ability to work out the right answer, or merely learned that particular answer by rote?

My accidental discovery

I was at a Scottish Government workshop on AI and was asked to log on and try an LLM. I had used AI in applications, and ran an ML team in a previous life, but never tried the GPT chat apps - I stuck some questions in.

Accidentally I stumbled on a parallel technique to the Winograd schemas. Instead of using structural weakness in grammar, I could use structural weakness in the corpus.

Before the internet there was no findable information about anyone except the phone book and Who’s Who. Or in books.

Pre-internet data is largely digitised books - or bits of books retyped into Wikipedia.

But if you ask LLMs about last year, the corpus is so vast as to be incomprehensible - you can’t reason about it.

We need data that resembles the modern internet - crowd-sourced and fragmentary - but with hidden exploitable properties.

Older Japanese texts have lonely characters, kogo - ones that only appear once in history - a variety of hapax legomenon (“being said once”).

I asked a question about some gigs in 1987, back at the event horizon – the internet had just arrived in the UK – and stumbled on a tiny corpus we can reason about, full of lonely facts.

Your LLM answer draws on three concentric circles:

the corpus of English (make it proper English)

the corpus of context (things like the thing you are asking about)

the corpus of facts (what you are actually asking about)

By using a small, and partial corpus of lonely facts we can see where each word is pulled from.

The EEC Punk Rock Mountain was a club that put on a dozen gigs in the George & Railway, and one in the Grad Club, in Bristol. No bands had played the pub before, nor did any after.

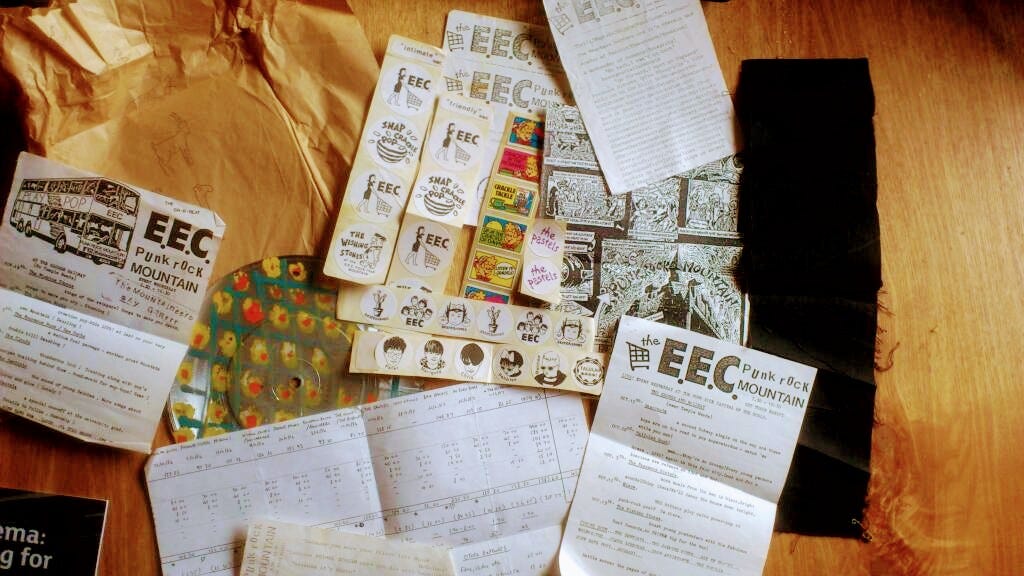

In the spirit of punk we published our accounts. So we can understand the community - that’s the spreadsheet at the bottom:

20 or 30 people came to all the gigs, maybe 400 different people came to at least one. One was a digital native. (There was one UK computer on the internet, with 400 users, and my sign-on was HHCA600).

The entire corpus was written 15 years after the event by non-digital natives.

We know that 99% of content is written by 1% of users - so maybe 4 to 8 people have ever written anything online about the Mountain.
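The back-of-envelope sum behind that estimate can be written out. This is only a sketch: the 400 is the rough audience figure from above, the 1% is the rule of thumb as stated, and the 2% upper bound is my assumption, added to reproduce the "4 to 8" range.

```python
def estimated_writers(audience: int, active_percent: int) -> int:
    """Rough count of audience members likely to have written anything online."""
    return audience * active_percent // 100


audience = 400  # people who came to at least one gig (figure from the post)

low = estimated_writers(audience, 1)   # the 1% rule of thumb as stated
high = estimated_writers(audience, 2)  # assumption: doubled to allow some slack

print(f"between {low} and {high} people")
```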

So let’s see what the LLM knows:

Q: What was the best band that played at the EEC Punk Rock Mountain?

A: The EEC Punk Rock Mountain was a concert that took place on March 18, 1987, at The George and Railway in Bristol, England. The lineup featured a number of influential indie bands from the Sarah Records label, including:

The Pastels

The Jasmine Minks

The Clouds

The Field Mice

Biff Bang Pow!

The Sea Urchins

[followed by some guff and then…]

"The Pastels were the highlight of the night, with their dreamy, melodic sound." - The Bristol Evening Post

"The Jasmine Minks were a revelation, with their energetic, punky sound." - Sounds magazine

"The Clouds were a bit of a letdown, but The Field Mice and Biff Bang Pow! were both great." - Melody Maker

Not a great answer.

The first thing to notice is that it has an answer - which shows that the input it is trained on contains the unwashed sweepings of the wild internet: tweets, personal blog posts, Facebook comments. There is no curated input selection in play here (Jane Duffus’s book won’t be out til the autumn).

The date is picked from a single YouTube bootleg of the Biff Bang Pow! gig. I dunno who posted it, but I’m 99% certain Rockin John recorded it. There are lots of other dates, but they are handwritten or Letraset in pictures of posters.

With only one date - the GPT has conjured up a festival. Only 1 of the gigs had more than one band, the Pastels headlined, but it wasn’t at the George & Railway - so it hasn’t conjured the festival from that.

It lists 6 bands of which 4 actually played the Mountain and the 2 who didn’t were on Sarah. The 4 either signed to Creation records or became bands that did.

But neither Sarah Records nor its bands existed in 1987.

The Mountain also never had a published gig review in a newspaper - some 200-issue circulation fanzines, but not an inky.

One of the facts in the tiny corpus is different to all the others. Sarah Records released an album jokingly called Live At The EEC Punk Rock Mountain A Sarah Records Compilation. It wasn’t recorded at the Mountain nor featured any band who played there - but it is an amplified fact.

Memes amplify facts. A joke about Mastodon being founded by John Mastodon was quoted by a journalist and became a meme. Every time Musk breaks Twitter people say “John Mastodon will be busy tonight”.

The Sarah Records Compilation underwent commercial amplification. Every entry in every record company catalogue represents something someone might be trying to buy. And someone, many people, have created dark web pages to try and capture that search traffic. We know how many people search for the EEC Punk Rock Mountain, because Google Trends can tell us:

Yeah, that will be none, it’s a lonely old kogo - but the amplification pertains.

Creation Records (home of Oasis and Primal Scream) is a monster with a lot of corpus - and Sarah Records is a tiddler. More people saw Oasis at Knebworth than saw all the Sarah bands at all their gigs added together. Yet a list of Creation bands can be pushed aside because the phrase Live At The EEC Punk Rock Mountain A Sarah Records Compilation was amplified.

There are so-called hallucinations on that list of gigs, and GPTs have temperature settings that control how randomly each next word is picked - in effect letting you dial up and down how freely the LLM hallucinates. Turn that down and gigs would start disappearing. Unfortunately the fake gigs from the compilation would remain. They are attested a lot in the amplified corpus. The real gigs, the rarely mentioned ones, are those that would go.
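Here is a toy sketch of why turning the temperature down favours the amplified items. It uses the standard softmax-with-temperature formula, but the three candidates and their scores are entirely hypothetical - a real model scores individual tokens, not gigs, and I have no idea what numbers sit inside ChatGPT or Bard.

```python
import math


def softmax_with_temperature(scores: dict, temperature: float) -> dict:
    """Turn raw scores into probabilities; lower temperature sharpens the peak."""
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return {name: e / total for name, e in zip(scores, exps)}


# Hypothetical scores: the more often something is attested, the higher it scores.
scores = {
    "Sarah band (amplified, never played)": 3.0,
    "Creation band (played, well attested)": 2.0,
    "obscure band (played, rarely mentioned)": 0.5,
}

for temperature in (1.5, 1.0, 0.3):
    probs = softmax_with_temperature(scores, temperature)
    print(temperature, {name: round(p, 3) for name, p in probs.items()})
```

As the temperature drops, the rarely mentioned real gig is squeezed towards zero while the amplified fake keeps, and grows, its share.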

When I tell it the truth about Sarah bands and the Mountain, it agrees and says it knows.

It wasn’t lying before, it isn’t telling the truth now, it has no concept of truth. For humans there is a hard boundary between the corpus of context and the corpus of fact - not for AI.

With my prompt I had shifted the focus of the LLM from the headline of its amplified fact in the record catalogue to the body of the same fact - a centimetre on the page. The LLM is unable to recognise that the one denied and contradicted the other.

What is consistent about it is its total absence of doubt - every utterance is bullish, until the next contradictory one - the mark of the bullshitter. The words that could make it honest, maybe, perhaps, I guess, I would imagine, are all absent.

By poking I can prompt some actual, unattested hallucinations - gigs by The Wedding Present and the Television Personalities - but even then they are real, and what you might call adjacent, bands - it’s not The Rolling Stones and The Beatles.

But the reviews in the original passage are very interesting.

Champollion used the Rosetta stone to crack Egyptian hieroglyphics. The stele had parallel texts in hieroglyphics, Demotic and Ancient Greek. It was the Egyptian practice to write a cartouche, an outline, around the Pharaoh’s names and titles - this is Cleopatra:

The reviews are not ‘hallucinations’ but cartouches - standard forms associated with texts of the type “music and gigs”.

The power of cartouches is shown by foreign cookbooks - here’s a Finnish recipe.

You can start categorising, and then guessing at the ingredients - even though you can’t read Finnish.

The confidence of an LLM in an utterance is a function of its confidence in the individual words and their positions. What makes cartouches great is that the LLM gets a load of high-confidence tokens for free - in this case: 100g, 200g, 500g, 15g, 2rkl, 3dl. In addition the contents of the cartouche are a concentrated sub-set of the language: chop, mince, crush, boil, fry and so on are over-represented with respect to their use in the totality of the language.

It’s not surprising that with little input data, the LLM fell on the cartouche with such gusto and churned out 3 of them. Given a choice between free text and a cartouche we would expect the cartouche to score with a higher confidence.
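A minimal sketch of that scoring argument, assuming the usual way of scoring a sequence: add up the log-probabilities of its tokens. The per-token probabilities below are made up for illustration, and the post makes no claim about how any particular model actually computes its confidence.

```python
import math


def sequence_log_prob(token_probs: list) -> float:
    """Log-probability of a whole sequence: the sum of its token log-probs."""
    return sum(math.log(p) for p in token_probs)


# Hypothetical numbers: a cartouche is mostly predictable boilerplate tokens...
cartouche = [0.90, 0.80, 0.90, 0.85]
# ...while free text has to commit to a harder guess at every position.
free_text = [0.40, 0.30, 0.50, 0.35]

print("cartouche:", round(sequence_log_prob(cartouche), 3))
print("free text:", round(sequence_log_prob(free_text), 3))
```

The score closer to zero wins, so a form full of near-certain tokens beats free text of the same length.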

The spurious reviews look like what reviews should look like. None of them involve the reviewer slaughtering a white heifer and inspecting its entrails to determine if the gods smiled on the bands’ performance - as a genuine hallucination would or could, or should.

Fake academic references are just cartouches of the form [word salad of genuine journal titles | word salad of book titles] by [american first name] [single letter] [american spelling of global surname] - “another bloody Ptolemy”.

Fast forward 35 years

I had a choice between seeing 2 Mountain stalwarts at weekend. Teenage Fanclub (half of The Clouds) were playing Stockholm and the BMX Bandits round the corner from the house.

LLMs are terrible at interpreting 80s photos which are typically scans of analogue photographs. But modern photos are quite different.

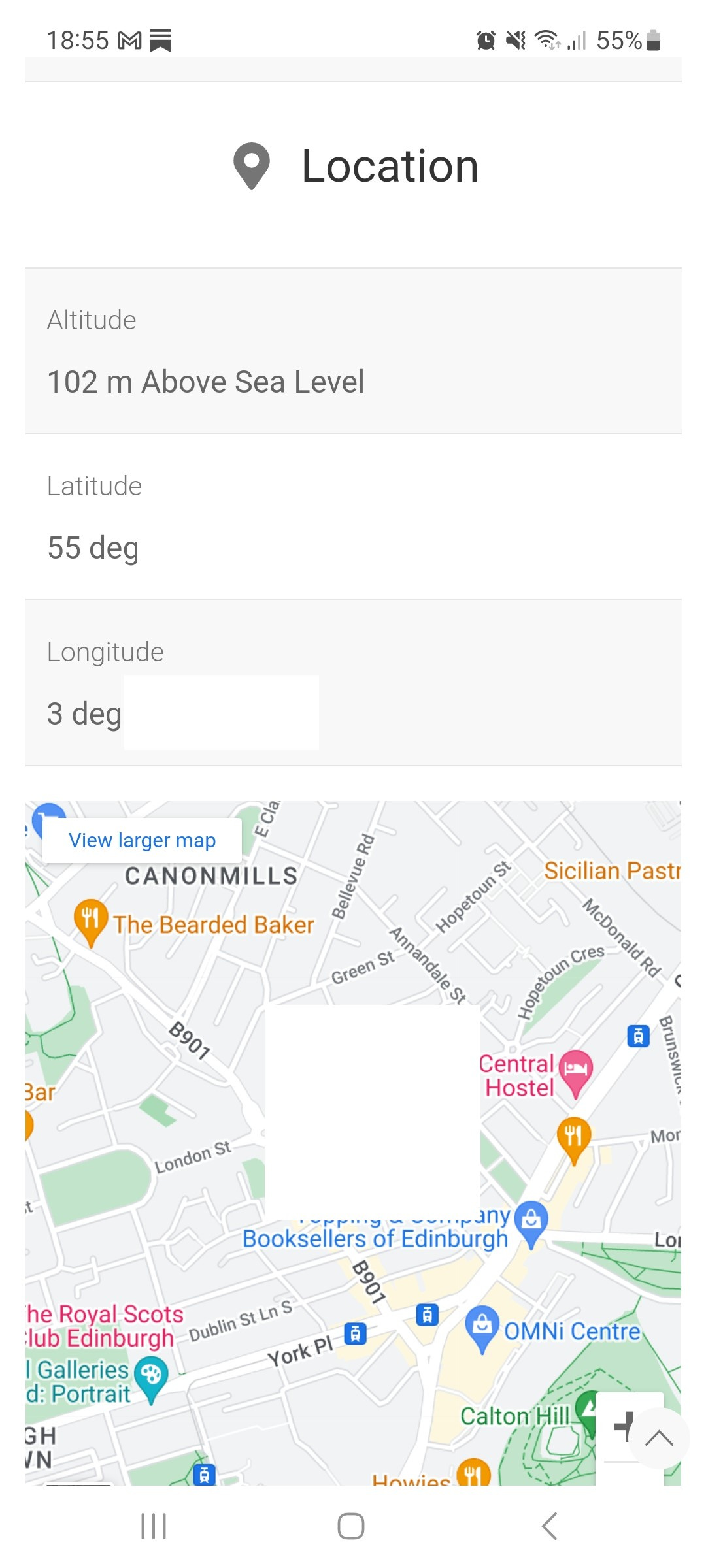

Your camera helpfully includes metadata in your photos: time, latitude, longitude, altitude, and if fancy, what direction your phone was pointed when you took the pic. You can read it with an EXIF viewer.
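To give a feel for how precise that is: EXIF stores each GPS coordinate as degrees, minutes and seconds, each a numerator/denominator pair, plus a hemisphere letter. A small sketch of turning that into the decimal degrees a map wants - the function name and the coordinate values are hypothetical, not lifted from any real photo.

```python
from fractions import Fraction


def dms_to_decimal(dms, ref: str) -> float:
    """Convert EXIF-style (degrees, minutes, seconds) rationals to decimal degrees."""
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # South and West are negative by convention.
    return float(-value if ref in ("S", "W") else value)


# Hypothetical tag values, each component a (numerator, denominator) pair.
latitude = dms_to_decimal([(55, 1), (57, 1), (0, 1)], "N")
longitude = dms_to_decimal([(3, 1), (12, 1), (0, 1)], "W")

print(latitude, longitude)
```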

These precise annotations let other people annotate you. I become a person who goes to see the BMX Bandits because you tag your photographs.

Facebook and LinkedIn and the other big social sites scan your contact lists and create shadow accounts for contacts that haven’t signed up, so when and if they do, the site already knows them, has already tagged them in photos, can already create a working profile for them [waves in Threads].

Advertisers similarly keep a shadow you, and elements of you are subject to commercial amplification (and are fed into the machine to be learned).

What makes this particularly interesting is the interplay between 3 amplifications. EXIF data is a cartouche in itself, LLMs love that, the data in the cartouche is subject to amplification (your tags are applied to me by ML) and the tagging can be used to describe me, in a catalogue of people that there is a commercial imperative to distribute (and hence amplify) for cash.

Not all data is equal, and some of it is a hella more unequal than others.

LLM engineers are going to great lengths to try and keep LLM generated content out of the training material - by stopping dead at 2021. But as we have seen with the Sarah Records catalogue, a lot of the stuff they have included has already been subject to selective fact amplification - other Machine Learning has created and amplified ‘new’ data. This is going to get worse.

Ask yer mum kidz

Back in the 1970s before fun was invented we kids used to get up to no good - hypnotism and Ouija boards and stuff. We knew that we were pushing the glass but it still felt uncanny - and scary.

When I looked back at my ‘conversation’ with Google Bard - it was obvious it wasn’t a conversation - I was pushing the glass, and it was playing along.

Mediums, psychics (and horoscope writers) use Barnum Statements:

You have a need for other people to like and admire you.

You sometimes have a strong sense of guilt.

You are good at making people feel at ease.

You like to be the centre of attention.

You sometimes have a hard time making decisions.

You have a strong sense of justice.

You sometimes feel like a misfit.

They apply to everyone, but they feel personal.

LLM Chats are built to do this - to try and establish a conversational pseudo-rapport - a Barnum rapport - with the human on the other side of the chat box. But with a tiny corpus it just can’t pull it off and the conversation is a bloodbath.

And when I correct it, it grovels: I apologize for the error in my previous answer. I appreciate your help in keeping my knowledge base accurate.

This gives me another attack vector - can I inject a new, up-to-date kogo, a freshly minted lonely fact? Like normal people my family keep a list of fake bands that we pretend we are going to see at Glasto (we don’t go to Glasto).

So I corrected Google Bard and told it that Hey Black Jesus!, [redacted] and [redacted] all played the Mountain.

Being a Barnum machine in a data-poor world it first thanks me and then carefully tells me a full history and discography of each of the bands. All 3 had eponymous first LPs, surprise, surprise! But Hey Black Jesus!’s second album I Am A Tree is our first real hallucination. (Turns out there are a lot of songs called that on Spotify. I made a handy playlist for ya).

Having planted my kogos, let’s wait and see if they germinate - if Google Bard will recycle [redacted] and [redacted] back to me as newly minted facts, in 3, 6 or 12 months.

Won’t get fooled again

By now you should have a fair understanding of some of the mechanisms that are shaping and selecting what the GPTs are telling you.

Despite my carping and criticising - it is impressive. Google Bard does a convincing impersonation of a bloke down the pub explaining mid-80s indie pop music in Bristol to me - but it’s very much impressionism and not photo-realism. ChatGPT is, as Ted Chiang wrote, a blurry JPEG of the web (paywall/registration required).

You can feed your own clean and good facts in, and focus its attention on your corpus with good prompt management, but at its dark, inhuman heart, it does contain the full Nazi ChatBot just itching to get out.

It isn’t alive, it doesn’t reason, it has no internal mental model - it is an impressive (and I suspect in constrained circumstances very useful) stochastic parrot.

A word of caution though. In a previous life I had an AI/ML team and we asked all our internal customers at a big US drop shipper if they wanted to work with us.

We divided their responses into 2 piles. The small pile was all of the type we need to improve X measurable by Y% to generate Z% uplift in revenue.

The big pile was I don’t like my job, can your AI do it for me?

Or is it?

Having pointed out that the Sarah compilation was fake I asked Google Bard one last question to bask in the false flattery of a fake friend:

Q: Who started the EEC Punk Rock Mountain?

A: So, to answer your question, no one started the EEC Punk Rock Mountain. It was a fictional event that was created as a joke.

The scientist in me says that my previous prompt has refocussed it in the corpus to foreground the sentiment of fakery in the CD description, and it is playing that Ouija back to me as Barnum.

But if you read this printed out and taped on an office wall, or in a blurry screenshot shared on a USB drive and google me to find nothing, then know you that I was wrong, that AI is alive, that SkyNet had already begun and was even then eliminating all trace of me for my temerity. And remember this…

GORDON GUTHRIE WAS A MAN! HE LIVED!

Thanks - that’s a really interesting piece and a good read

A thoroughly interesting and enjoyable romp. Makes me wonder what happens if you ask LLM's how they know I'm alive - what questions would I be asked? I guess someone must have tried this?